- Registered: 25.01.2012
- Messages: 1 068
- Thanks received: 257
- Points: 83

I use Luminati proxies, and they charge about $15/GB. So if I create, e.g., 1k GMX accounts, it would cost me about $45 if I load all the elements on their sign-up page and cache nothing (not to mention the shitty ads and "news" on their main site if you want to make it look more legit). I've already tried disabling all images and unnecessary scripts on the site, but traffic per account still stays at about 1 MB.
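As a quick check of the numbers: at $15/GB, $45 for 1,000 sign-ups is about 3 GB, i.e. roughly 3 MB per account with nothing blocked or cached, while the ~1 MB left after stripping images and scripts works out to about $15. A minimal Python sketch of that arithmetic, assuming decimal units (1 GB = 1,000 MB; the provider may bill differently) and using hypothetical helper names:

```python
PRICE_PER_GB = 15.0  # USD per GB, the rate quoted above
MB_PER_GB = 1000     # assumption: decimal units


def proxy_cost(accounts: int, mb_per_account: float,
               price_per_gb: float = PRICE_PER_GB) -> float:
    """Estimated proxy bill in USD for `accounts` sign-ups."""
    return accounts * mb_per_account / MB_PER_GB * price_per_gb


def implied_mb_per_account(total_cost: float, accounts: int,
                           price_per_gb: float = PRICE_PER_GB) -> float:
    """Per-account traffic in MB implied by a total bill."""
    return total_cost / price_per_gb * MB_PER_GB / accounts


print(implied_mb_per_account(45, 1000))  # 3.0  (everything loaded)
print(proxy_cost(1000, 1.0))             # 15.0 (images/scripts disabled)
```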

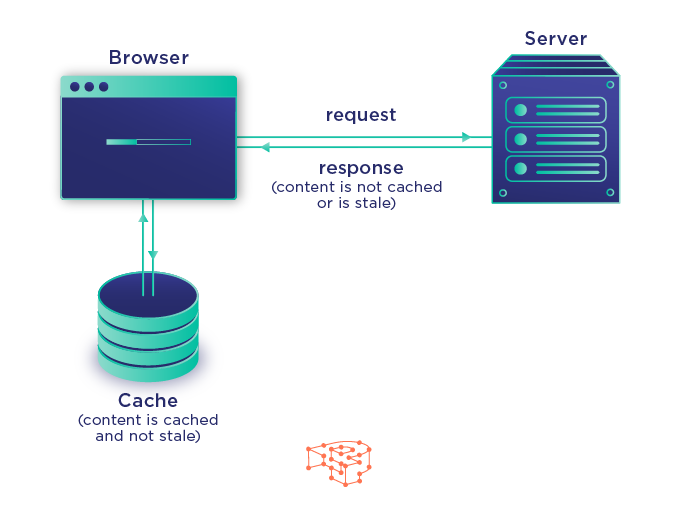

I think if I could cache all the elements that stay the same on every sign-up (but not the fingerprinting/tracking ones, which need to be loaded again every time), I could cut the cost to about 25%. But I don't know how to correctly cache the static things and reload the others in ZP...
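Whether the 25% target is reachable depends on how the ~1 MB per account splits between assets that are identical on every sign-up (downloaded through the proxy once, then served locally) and requests that must go through the proxy each time. A rough model in Python, assuming a perfect cache and a hypothetical `static_mb`/`dynamic_mb` split (the real split would have to be measured, e.g. in the browser's network panel):

```python
def total_traffic_mb(accounts: int, static_mb: float, dynamic_mb: float) -> float:
    """Proxy traffic when static assets are fetched once and then cached.

    static_mb:  bytes identical on every sign-up (paid for only once)
    dynamic_mb: bytes that must be re-downloaded for every account
    """
    return static_mb + accounts * dynamic_mb


def cost_ratio(accounts: int, static_mb: float, dynamic_mb: float) -> float:
    """Traffic with caching divided by traffic without any caching."""
    uncached = accounts * (static_mb + dynamic_mb)
    return total_traffic_mb(accounts, static_mb, dynamic_mb) / uncached


# Hypothetical split of the ~1 MB per account: 0.75 MB static, 0.25 MB dynamic
ratio = cost_ratio(1000, static_mb=0.75, dynamic_mb=0.25)
print(f"{ratio:.1%}")
```

With this split the ratio comes out just over 25%: roughly three quarters of the bytes have to be cacheable, and the one-off download of the static part becomes negligible over 1,000 sign-ups.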

Maybe there is software out there that works like AdGuard (an intercepting "proxy" server that filters ads out of your browsing traffic): an intermediary that caches most of the elements on specified websites and lets you (re-)load the ones you need, saving most of the bandwidth that would normally be used. I read something about Squid, but it seems too complicated to configure, so an easy-to-set-up solution would be preferred.
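The core of such an intermediary is a per-request decision: answer from a local store if the URL matches an allow-list of static assets, otherwise forward the request upstream. A minimal Python sketch of that policy only (not a proxy server), assuming a hypothetical `fetch(url) -> bytes` function that performs the billed request through the proxy; the URL patterns are illustrative. For HTTPS sites an intermediary only sees URLs and bodies if it decrypts the traffic, as AdGuard does with its HTTPS filtering or Squid with SslBump, so the client has to trust the intermediary's certificate.

```python
import re
from typing import Callable, Dict, Iterable


class SelectiveCache:
    """Serve allow-listed static assets from memory; fetch everything else.

    fetch: hypothetical callable that downloads a URL through the paid
    proxy and returns the response body as bytes.
    """

    def __init__(self, fetch: Callable[[str], bytes],
                 cacheable_patterns: Iterable[str]) -> None:
        self._fetch = fetch
        self._patterns = [re.compile(p) for p in cacheable_patterns]
        self._store: Dict[str, bytes] = {}
        self.bytes_from_proxy = 0  # billed traffic
        self.bytes_from_cache = 0  # traffic saved

    def _is_cacheable(self, url: str) -> bool:
        return any(p.search(url) for p in self._patterns)

    def get(self, url: str) -> bytes:
        if self._is_cacheable(url) and url in self._store:
            body = self._store[url]
            self.bytes_from_cache += len(body)
            return body
        body = self._fetch(url)  # non-matching URLs always go upstream
        self.bytes_from_proxy += len(body)
        if self._is_cacheable(url):
            self._store[url] = body
        return body


if __name__ == "__main__":
    def fake_fetch(url: str) -> bytes:  # stand-in for the real proxied download
        return b"x" * (750 if url.endswith(".js") else 250)

    cache = SelectiveCache(fake_fetch, [r"\.(js|css|png|woff2?)$"])
    for _ in range(2):  # two simulated sign-ups
        cache.get("https://example.com/static/app.js")
        cache.get("https://example.com/signup")
    print(cache.bytes_from_proxy, cache.bytes_from_cache)  # 1250 750
```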

Thanks in advance for your help, guys!